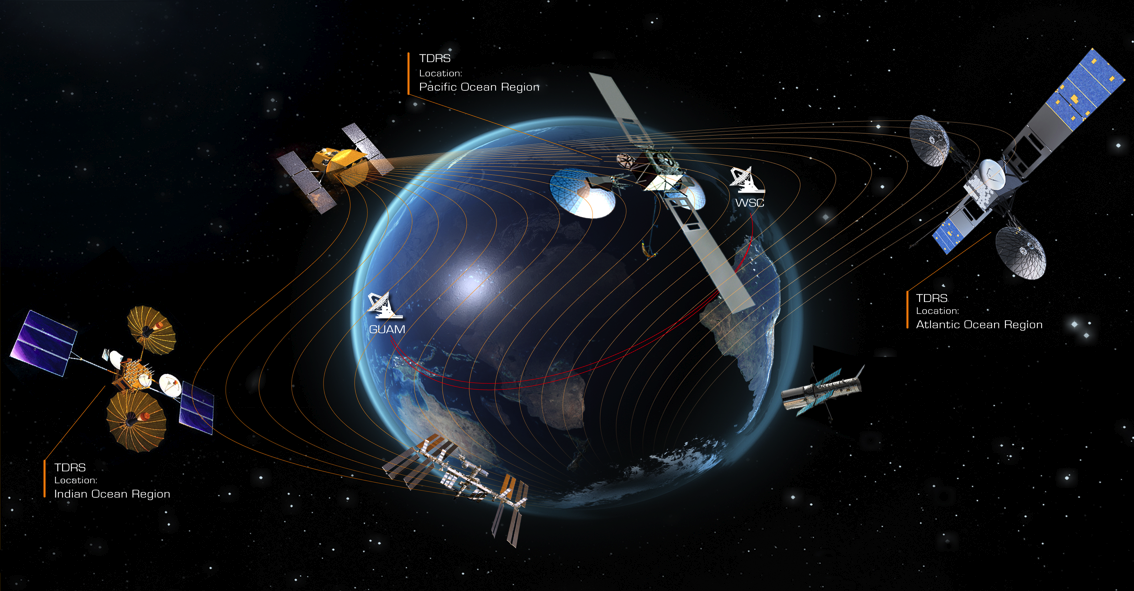

The data you collect from a survey is rarely the exact dataset that you want to analyze. Whether you surveyed your own house list or paid panelists, some participants may have answered more than once, or provided responses that should be excluded because they make your results less representative. As with data from a satellite observing the earth, errors sometimes creep in and must be corrected.

For a house list survey, the type of mistake encountered is usually simple: someone started a survey, got interrupted, and restarted it a day or a week or even a month later. You don’t want to double-count one respondent, so you will need to identify and delete all but one of their responses. We typically delete all but the last complete response or the longest partial response, and we identify each individual uniquely by piping into the survey their email address or their contact identifier from the marketing automation software used to send the personalized invitations.
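The rule above can be sketched in a few lines of Python. This is a minimal illustration, not our production process: the field names (`respondent_id`, `complete`, `answered`, `submitted_at`) are hypothetical, and the sketch assumes that a complete response always takes priority over a partial one.

```python
from datetime import datetime


def pick_response_to_keep(responses):
    """Choose the single response to keep for one respondent."""
    complete = [r for r in responses if r["complete"]]
    if complete:
        # Assumption: any complete response beats a partial; keep the latest.
        return max(complete, key=lambda r: r["submitted_at"])
    # No complete response: keep the partial with the most questions answered.
    return max(responses, key=lambda r: r["answered"])


def deduplicate(responses):
    """Group responses by respondent and keep one response per respondent."""
    by_respondent = {}
    for r in responses:
        by_respondent.setdefault(r["respondent_id"], []).append(r)
    return [pick_response_to_keep(group) for group in by_respondent.values()]


# Hypothetical sample data: the ID is the piped-in email address.
responses = [
    {"respondent_id": "a@example.com", "complete": False, "answered": 4,
     "submitted_at": datetime(2024, 1, 1)},
    {"respondent_id": "a@example.com", "complete": True, "answered": 10,
     "submitted_at": datetime(2024, 1, 8)},
    {"respondent_id": "b@example.com", "complete": False, "answered": 3,
     "submitted_at": datetime(2024, 1, 2)},
    {"respondent_id": "b@example.com", "complete": False, "answered": 7,
     "submitted_at": datetime(2024, 1, 3)},
]

kept = deduplicate(responses)
```

Here the first respondent's complete response is kept, and the second respondent's longer partial (7 answers) is kept.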

For a survey promoted over social media, the data cleansing may be more difficult. You still might have people start and then restart the survey, but now you have no unique identifier with which to track them. If you are offering a prize or incentive, you may see the survey inundated by spam. For one study that one of our clients promoted on social media, the first 100 responses were all from the same respondent: he answered all questions exactly the same 100 times, but provided a unique Gmail address for each response! One preventive measure is to give the social media version of the survey a different URL from the one used in your email campaigns, so that these responses are easier to isolate and clean. A second preventive measure is to not offer monetary incentives via Twitter or Facebook or Snapchat.
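One way to surface spam like the 100 identical responses described above is to count how often the exact same set of answers recurs, ignoring the email address. The sketch below is illustrative only: the question IDs and the `min_repeats` threshold are hypothetical, and on a short survey legitimate respondents can give identical answers, so a match is a reason to look closer rather than proof of fraud.

```python
from collections import Counter


def repeated_answer_patterns(responses, question_ids, min_repeats=3):
    """Return answer patterns that occur at least `min_repeats` times."""
    patterns = Counter(
        tuple(r[q] for q in question_ids) for r in responses
    )
    return {p: n for p, n in patterns.items() if n >= min_repeats}


# Hypothetical sample: three identical answer sets under different emails.
social_responses = [
    {"email": "x1@gmail.com", "q1": "a", "q2": "b", "q3": "c"},
    {"email": "x2@gmail.com", "q1": "a", "q2": "b", "q3": "c"},
    {"email": "x3@gmail.com", "q1": "a", "q2": "b", "q3": "c"},
    {"email": "y@example.com", "q1": "b", "q2": "b", "q3": "a"},
]

suspicious = repeated_answer_patterns(social_responses, ["q1", "q2", "q3"])
```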

For surveys published to one or more panels, the potential problems grow more complicated:

- If you are using multiple panels, do you have a way to ensure that one respondent isn’t taking the same survey multiple times? For a patient survey for a low-incidence condition, we once had the same individual take the survey 6 times, through 6 different panel companies. (We identified him as the same individual based on a subset of demographic questions that, taken together, flagged him for further investigation, which revealed that these responses all belonged to one person.)

- We track respondents across 5 different data-quality measures, derived from the SSI white paper “Best Practices in Online Verification and Quality Control”, with our own secret sauce sprinkled on top. A key insight from the paper: “Because anyone’s attention can wander throughout the course of a … survey, it is important that a minimum of two quality control questions are used and that participants are only flagged as failing if both are answered incorrectly.” Even respondents with the best of intentions make mistakes when taking a survey, such as misreading a question or misinterpreting instructions, perhaps because they lost focus due to an interruption. Removing everyone who makes a single mistake actually makes the data less representative.

- Depending on the topic, and the prevalence of open-ended questions, we supplement our assessment of data quality by asking respondents which language they grew up speaking. As Annie Pettit has compellingly documented in her presentation, “Data Quality in Surveys from Non-english Speakers”, overzealous application of quality controls can eliminate responses from “non-English speakers who have valid opinions but a harder time understanding survey questions.”

- While a small percentage of panelists may engage in purposefully fraudulent behavior (0% to 5% for general population studies, depending on the panel), such panelists make up a greater proportion of responses to low-incidence studies. This happens because such panelists lie in order to qualify for these studies (see Kerry Hecht et al.’s recent research on research). For instance, for one recent study of a niche market, 61% of the responses were fraudulent. We’ve found that the fraud rate climbs exponentially as the size of the incentive for online studies increases. For low-incidence work, one key is to offer the same modest incentive structure that you would for a general consumer study.
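Two of the checks in the list above lend themselves to a short sketch: flagging a respondent only when both quality-control questions are failed, and grouping responses from different panels that share the same combination of demographic answers. This is a simplified illustration, not our actual scoring. The question names, answer key, and demographic fields are all hypothetical, and a shared demographic fingerprint only marks responses for further investigation.

```python
from collections import defaultdict

# Hypothetical answer key for two quality-control questions.
ANSWER_KEY = {"qc1": "blue", "qc2": "strongly disagree"}


def fails_quality_check(response, answer_key):
    """Flag only if every quality-control question was answered incorrectly."""
    return all(response.get(q) != correct for q, correct in answer_key.items())


def possible_duplicates(responses, fields):
    """Group response IDs that share the same answers on `fields`."""
    groups = defaultdict(list)
    for r in responses:
        fingerprint = tuple(r[f] for f in fields)
        groups[fingerprint].append(r["response_id"])
    # Only fingerprints seen more than once merit a closer look.
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical responses collected through three panels.
panel_responses = [
    {"response_id": 1, "panel": "A", "qc1": "blue", "qc2": "agree",
     "birth_year": 1970, "zip": "02139", "gender": "M"},
    {"response_id": 2, "panel": "B", "qc1": "red", "qc2": "agree",
     "birth_year": 1970, "zip": "02139", "gender": "M"},
    {"response_id": 3, "panel": "C", "qc1": "blue", "qc2": "strongly disagree",
     "birth_year": 1985, "zip": "94110", "gender": "F"},
]

flagged = [r["response_id"] for r in panel_responses
           if fails_quality_check(r, ANSWER_KEY)]
to_investigate = possible_duplicates(
    panel_responses, ["birth_year", "zip", "gender"]
)
```

Response 1 misses one quality-control question but passes the other, so it is not flagged; only response 2, which misses both, is. Responses 1 and 2 share a demographic fingerprint across two panels, so they are grouped for review.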

Regardless of the type of survey that you are fielding, data cleaning can’t wait until the end. If you seek to collect 200 responses only to find the first 100 are fraudulent, you will want to learn that as soon as possible, not when you’re about to close the survey in order to meet the project deadline!

As with questionnaire design, implementing quality control procedures is a mix of art and science. Work carefully to come up with the most accurate results possible – the dataset that you can beam back to your team with confidence.