For ten years, I’ve been training an AI to do my job. Since I couldn’t hit up the neighborhood Jawas to buy an off-the-shelf droid, we have had to build our own AI.

So far, I’ve broken my job into 2,725 tasks, of which we’ve implemented 1,161. These tasks have ranged from the incremental (e.g., “use Section Headings in PowerPoint”) to the transformative. For instance, last year we added a new Highlights feature, which selects and summarizes the top five or so questions from a survey. Like many of our story-driven features, its rules system looks to the questionnaire itself for cues about what is important.
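
To make the idea of a questionnaire-driven rules system concrete, here is a minimal sketch of how cues from a survey could feed a rule-based Highlights selection. It is not our actual implementation: the `Question` fields, the cue facts, the rules, and the weights are all hypothetical, and the forward-chaining loop is a generic expert-system technique chosen for illustration.

```python
# Hypothetical sketch, not the ResearchStory implementation.
from dataclasses import dataclass, field


@dataclass
class Question:
    qid: str
    text: str
    qtype: str      # hypothetical type labels: "rating", "single", "multi", "open"
    position: int   # 1-based order in the questionnaire
    facts: set = field(default_factory=set)


# Hypothetical rules: (name, facts that must all be present, fact to conclude).
RULES = [
    ("rating_is_scale", {"type:rating"}, "is_scale"),
    ("early_is_core", {"early"}, "core_topic"),
    ("scale_core_is_key", {"is_scale", "core_topic"}, "key_measure"),
    ("overall_is_key", {"mentions:overall"}, "key_measure"),
]

# Hypothetical weights for concluded facts; unlisted facts score zero.
WEIGHTS = {"key_measure": 3, "core_topic": 1, "is_scale": 1}


def base_facts(q: Question, total: int) -> set:
    """Derive starting facts from questionnaire cues (type, position, wording)."""
    facts = {f"type:{q.qtype}"}
    if q.position <= max(1, total // 3):  # first third of the questionnaire
        facts.add("early")
    if "overall" in q.text.lower():
        facts.add("mentions:overall")
    return facts


def infer(facts: set, rules) -> set:
    """Forward chaining: keep firing rules until no new facts are added."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for _name, conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


def highlights(questions, top_n: int = 5):
    """Score each question by its inferred facts and return the top_n."""
    total = len(questions)
    scores = {}
    for q in questions:
        q.facts = infer(base_facts(q, total), RULES)
        scores[q.qid] = sum(WEIGHTS.get(f, 0) for f in q.facts)
    # Highest score first; ties go to the question asked earlier.
    ranked = sorted(questions, key=lambda q: (-scores[q.qid], q.position))
    return ranked[:top_n]


if __name__ == "__main__":
    survey = [
        Question("Q1", "Overall, how satisfied are you?", "rating", 1),
        Question("Q2", "Which features do you use?", "multi", 2),
        Question("Q3", "How likely are you to recommend us?", "rating", 3),
        Question("Q4", "What is your age?", "single", 4),
        Question("Q5", "Any other comments?", "open", 5),
        Question("Q6", "How easy was setup?", "rating", 6),
    ]
    for q in highlights(survey, top_n=3):
        print(q.qid, q.text)
```

Forward chaining lets conclusions build on earlier conclusions (here, `key_measure` can follow from `is_scale` plus `core_topic`). Writing and tuning rules like these is the kind of work a subject-matter expert does when training an expert system.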

Our AI is an expert system, which requires extensive training time from a subject-matter expert: I’ve invested 591 hours so far.

In contrast, the new generation of AIs is powered by large language models (LLMs) trained on the contents of the World Wide Web. We have experimented with ChatGPT, but as a large language model, it far too happily disconnects itself from reality. Like that time it invented a career for me as an award-winning Wisconsin-based writer:

> Jeffrey Henning is an American author, poet, and editor. He is known for his works of poetry, essays, and fiction. He has published several books, including “An Elsewhere Place”, “Topographies”, “Sight Lines”, and “Before the Rain”. His writing has been featured in numerous literary magazines and anthologies.
>
> In addition to his writing, Jeffrey Henning is also an editor and publisher. He is the founder and editor of the literary magazine “Verse Wisconsin”, which features the work of poets and writers from Wisconsin and around the world. He is also the publisher of the independent publishing company, “Woodland Pattern”.
>
> Henning has received several awards and recognitions for his work, including the Wisconsin Arts Boards’ Literary Arts Fellowship and the Council for Wisconsin Writers’ Lorine Niedecker Poetry Award. He is known for his innovative use of language, his vivid imagery, and his ability to capture the essence of the natural world in his writing.

Or when it invented citations to articles and studies that weren’t real. Or when it provided recipes using poisonous plants.

As Samantha Quiñones put it:

> Intent and ability to conceptualize? These machines can do neither. They produce strings of tokens that statistically appear like they were produced by a human. To an LLM the statements “Neil Armstrong was the first man to walk on the moon” and “Neil Armstrong was the first man to walk on Mars” are differentiated only in that more people have written the former than the latter.

Wolfram Alpha has more on how AIs like ChatGPT actually work. Fun analogy: Emily Bender calls them “stochastic parrots”.

That doesn’t mean parroting something back to you is never useful. We ourselves have had modest success using ChatGPT to write code for ResearchStory Enterprise, improving our development team’s productivity. So, yes, we’re using an AI to improve our AI. (Skynet?!)

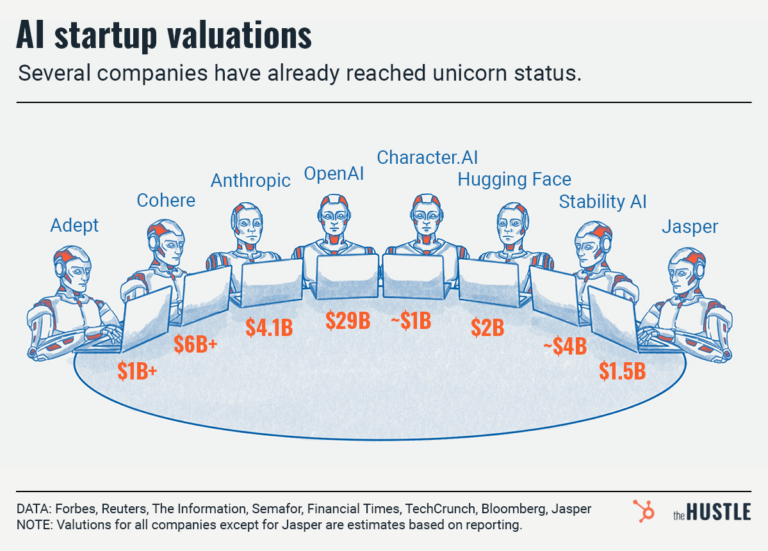

Now keep in mind that OpenAI, the company behind ChatGPT, is just one of more than eight AI “unicorns” (firms with $1B+ valuations) building large language models, according to The Hustle:

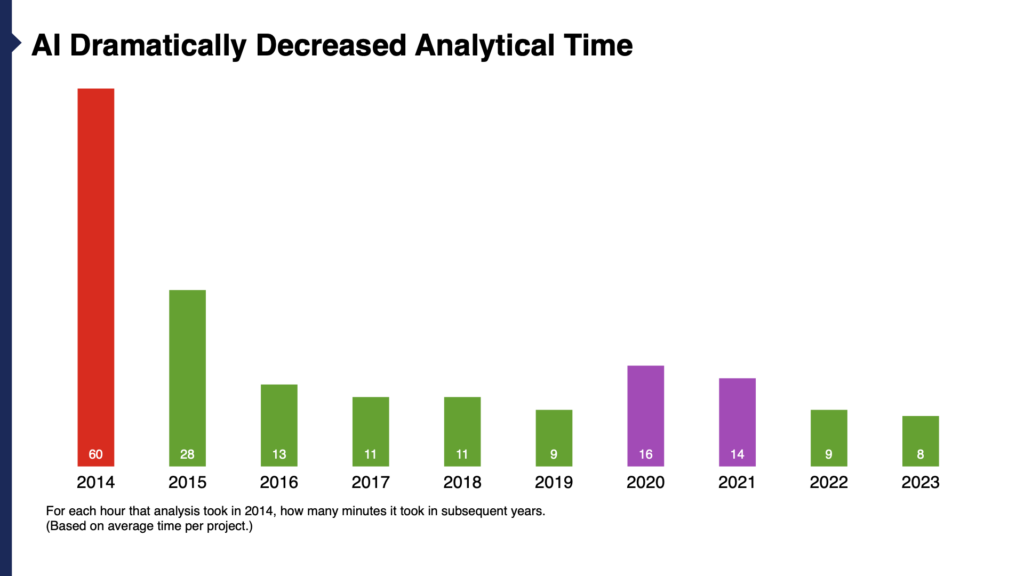

Unlike ChatGPT, our AI uses an inference engine that acts on the data and metadata of our surveys, applying rules to decide how best to summarize that data. What’s been the impact? In its second year, the AI cut our survey-analysis time roughly in half: 28 minutes for every hour spent before. In its third year, it cut time by about three-quarters: 13 minutes for every hour spent before.
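
As a quick check on those round numbers, assuming a 60-minute hour of pre-automation analysis as the baseline, the reductions work out to:

$$
1 - \frac{28}{60} \approx 0.53 \quad (\text{about } 53\%), \qquad 1 - \frac{13}{60} \approx 0.78 \quad (\text{about } 78\%)
$$

So “in half” and “three-quarters” are rounded descriptions of roughly 53% and 78% savings.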

The pace of improvement slowed from 2017 to 2019. Then a funny thing happened: the time savings stopped, and analysis time actually bumped up in 2020 and 2021. But quality was increasing.

The more we automated, the more time we had to add other value: the kind only a human can provide.

Goldman Sachs warns that AI could expose the equivalent of 300 million full-time jobs to automation. But Brian Livingstone argues, “You’re fired if you don’t know how to use GPT-4.” I think Brian’s closer to the truth: professionals who embrace AI to improve their productivity and the quality of their work are going to outcompete those who don’t.

I’ve been trying to replace myself with an AI for a decade now. Instead, I’ve found it to be less like the Terminator and more like C-3PO: an excellent, constantly learning coworker that enables me to do my job better than ever.

First published March 29, 2023. Stats updated.