In a recent webinar, Aaron Platshon, CEO of player-insights firm Tap Research, said in regard to survey length: “Eight minutes is the new ten minutes.” For quite a few years, the advice has been to shorten surveys to ten minutes, but that’s no longer good enough. Respondents will abandon long surveys without completing them, and they now abandon them even more quickly than they did in the past.

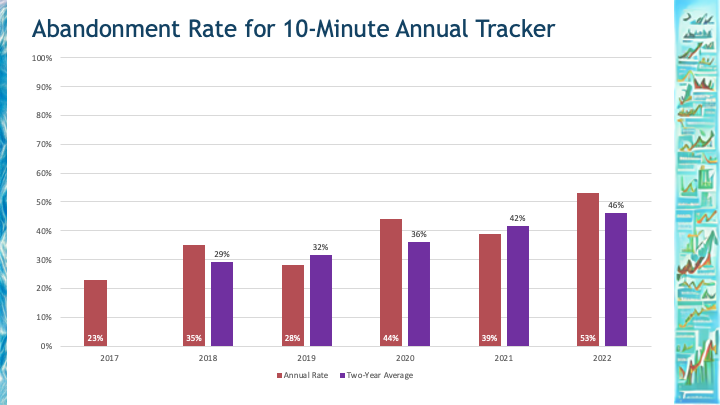

For instance, here is the abandonment rate for an annual tracking study we conduct, by year:

The abandonment rate rose from 23% in 2017 to 53% in 2022. The two-year average evens out the variance and shows a steady increase. (This thought-leadership survey is of a client’s house list of business executives.)

This shrinking patience with long surveys is driven in large part by the increasing use of mobile devices to take surveys, which grew from 70% of our respondents in 2021 to 77% in 2022.

Complaints about length are up as well. At the end of every survey, we ask respondents, “(Optional.) What, if anything, could we do to improve the quality of this survey? We also welcome any final comments about any aspects of the topics we covered.” Some sample complaints:

- “Make it a little shorter. It was longer than seven minutes.”

- “That it not take 5 minutes more than told!”

- “Don’t say they take 5 min to complete when it takes 10 minutes.”

- “Less minutes on every survey: 5 minutes and under.”

- “Please make more surveys with 5 minutes or less.”

Some sample responses that praised our length:

- “Don’t change anything. It is a good survey. Many of them are too long, but this one was good. Anything longer than 10 minutes, and I start to lose concentration.”

- “It’s fine, at least you don’t say it’ll take 10 minutes or so and you end up tied up for 30 minutes.”

- “One of my favourite surveys I’ve taken so far: it was quick, efficient and user friendly. From someone with a busy lifestyle who will happily fill out a quick 5-minute survey.”

- “Good survey, print big enough to read easily, no stupid repetition which people resent, perfect length. I’d always rather do two short surveys and tell the truth because I am not bored. If it is one too-long repetitive survey people get irritated and they stop giving accurate and true answers.”

Other comments highlight gaps between the estimated time and the actual time to complete:

- “This took me much longer than expected. It said eight minutes, but I believe in using correct grammar and punctuation. I do not mind doing it correctly because that is the way I am accustomed to doing things.”

- “The questions were good, not sure if the length of time listed is the actual time as it appears it took me longer than expected.”

- “Some of the questions are almost repetitive, and it did take longer than the average time listed.”

- “The survey was way longer than the estimated time given.”

In our case, the estimated time to complete is calculated by Alchemer, but it is just an estimate. Because skip patterns and display logic show different questions based on the answers to prior questions, some respondents will see a longer questionnaire than others. And some people are just more fastidious, which makes it impossible to predict for an individual person how long the survey will take.

As an example, consider a survey we fielded this weekend with 18 questions that all respondents saw (no skip patterns). It had a 6% abandonment rate and took a median of 3 minutes and 22 seconds to complete. But there was tremendous variance: from the respondent who took 1 minute and 6 seconds to the respondent who took 4 and a half hours to complete the survey (presumably they were interrupted!). The slowest 10% of respondents took a median of 7 minutes to complete the survey. In part, this was because there were three open-ended questions: one mandatory and two optional. Respondents typed a median of 16 words, but the word count ranged from 3 to 166 words. Obviously, if you have a lot to say, you will spend more time than the average respondent.
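To make the summary statistics above concrete, here is a minimal Python sketch of how an overall median and a "slowest 10%" median could be computed from per-respondent completion times. The function name and the sample durations are made up for illustration (only the 66-second and 4.5-hour extremes echo the survey described above); this is not our actual data or tooling.

```python
from statistics import median


def summarize_durations(seconds):
    """Return (overall median, median of the slowest 10%) in seconds."""
    ordered = sorted(seconds)
    # Size of the slowest decile; at least one respondent.
    tail_size = max(1, len(ordered) // 10)
    slowest = ordered[-tail_size:]
    return median(ordered), median(slowest)


# Hypothetical completion times in seconds, including one interrupted
# respondent (16200 s = 4.5 hours). Illustrative values only.
durations = [66, 150, 180, 195, 202, 210, 240, 300, 420, 16200]
overall, slowest_decile = summarize_durations(durations)
print(overall, slowest_decile)
```

The median is used rather than the mean because a single interrupted respondent would otherwise dominate the average.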

Since our founding in 2012, we’ve championed shorter surveys and polls, originally aiming for 10-minute experiences at a time when many were advocating for 20- or 25-minute surveys. Now we’re aiming to beat that.

How do you estimate how long your survey will be? As mentioned, some survey tools, such as Alchemer, can predict the median time. Some researchers rely on more manual methods. For instance, Pew Research assigns different types of questions different point values (1 point for a choose one, 2 for a choose-all-that-apply, etc.) and allocates 85 points for a 15-minute questionnaire.
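A points-based estimate like the one described above can be sketched in a few lines of Python. Only the two point values (1 for choose-one, 2 for choose-all-that-apply) and the 85-points-per-15-minutes budget come from the text; the `open_end` value, the function names, and the assumption that minutes scale linearly with points are ours, purely for illustration.

```python
# Point values per question type. "open_end" is a hypothetical placeholder,
# not a published figure.
POINTS = {
    "choose_one": 1,
    "choose_all": 2,
    "open_end": 3,  # assumption for illustration only
}

BUDGET_POINTS = 85   # points allotted to a 15-minute questionnaire
BUDGET_MINUTES = 15


def total_points(question_types):
    """Sum the point values for a list of question-type labels."""
    return sum(POINTS[q] for q in question_types)


def estimated_minutes(question_types):
    """Scale points to minutes, assuming a linear relationship."""
    return total_points(question_types) * BUDGET_MINUTES / BUDGET_POINTS


# A hypothetical 18-question draft.
questionnaire = ["choose_one"] * 12 + ["choose_all"] * 4 + ["open_end"] * 2
print(total_points(questionnaire), round(estimated_minutes(questionnaire), 1))
```

If the estimate comes out above your target, the point totals show which question types to cut first.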

If the survey looks too long, how do you shorten it? Since at least 2015 we’ve been discussing techniques for shortening surveys:

“A sparse survey is one where most questions are not directly asked to an individual respondent. To keep the survey short, from the respondent’s perspective, respondents are skipped over most of the questions through different techniques: verbatim cascades, iceberg matrixes, slices, imported fields.”

For instance, matrixes (AKA grids), which use the same scale for row after row, are often disliked by respondents. Panel companies recommend showing no more than five rows in a grid. One way we achieve this is by showing each respondent a subset of rows, selected at random. Yes, each individual item collects fewer responses, but the respondent experience is better.
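Most survey platforms have a built-in setting for this, but the underlying logic is simple. The sketch below is a minimal Python illustration, not the configuration of any particular tool: the function name, the five-row default, and the choice to keep the selected rows in their original order are all assumptions.

```python
import random

MAX_ROWS = 5  # cap suggested by panel companies, per the text above


def rows_to_show(all_rows, max_rows=MAX_ROWS, rng=None):
    """Return a random subset of at most max_rows rows, in original order."""
    rng = rng or random.Random()
    if len(all_rows) <= max_rows:
        return list(all_rows)
    # Sample row positions, then keep the grid's original ordering.
    chosen = set(rng.sample(range(len(all_rows)), max_rows))
    return [row for i, row in enumerate(all_rows) if i in chosen]


# Hypothetical 10-row grid; each respondent sees a different 5 rows.
grid_rows = [f"Brand {letter}" for letter in "ABCDEFGHIJ"]
print(rows_to_show(grid_rows))
```

Because every row has the same chance of being shown, each item ends up with roughly half the sample in this example, which is the trade-off described above.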

Another technique is to import common demographics. One of our partners supports a rich API that we can use to import information about their panelists into our survey. We pull in their past answers for birth year, gender, postal code, marital status, children in the household, education level, household income, and employment status. We only ask if this information is missing from their profile or if we need updated information for other questions (e.g., current employment status, rather than an answer they’ve provided in the past two months).
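The "ask only if missing or out of date" rule can be sketched as follows. This is a hypothetical illustration: the profile shape (field name mapped to a value and the date it was answered), the function name, and the 60-day freshness window are assumptions, not our partner's actual API or schema.

```python
from datetime import date, timedelta


def fields_to_ask(profile, needed, max_age_days=None, today=None):
    """Return the fields to ask because they are missing or stale.

    profile: hypothetical mapping of field -> (value, date answered).
    max_age_days: field -> oldest acceptable answer age in days; fields
    not listed are accepted at any age.
    """
    today = today or date.today()
    max_age_days = max_age_days or {}
    ask = []
    for field in needed:
        entry = profile.get(field)
        if entry is None or entry[0] is None:
            ask.append(field)  # nothing on file for this panelist
            continue
        _, answered_on = entry
        limit = max_age_days.get(field)
        if limit is not None and today - answered_on > timedelta(days=limit):
            ask.append(field)  # on file, but too old for this survey
    return ask


# Made-up panelist profile for illustration.
profile = {
    "birth_year": (1980, date(2020, 5, 1)),
    "employment_status": ("employed", date(2022, 6, 1)),
    "gender": (None, date(2021, 1, 1)),
}
needed = ["birth_year", "gender", "postal_code", "employment_status"]
print(fields_to_ask(profile, needed, {"employment_status": 60},
                    today=date(2023, 1, 15)))
```

Stable fields such as birth year are never re-asked, while fields that change, such as employment status, get an explicit freshness window.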

What is the ideal survey length?

Five minutes is the new eight minutes.