Probability sampling remains the gold standard for producing results that are representative of target populations, so much so that non-probability methods typically try to emulate it where possible:

- Positioning a panel survey as a random sample of panelists

- Using a random sample of the population to select potential respondents (sample matching)

- Using a random sample of intercepted members of a subset of the population

- Applying post-stratification weights

- Reporting sampling error
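To make the post-stratification weighting in the list above concrete, here is a minimal sketch. It assumes a single weighting variable (age group) and uses made-up sample counts and population shares; the function name `post_stratification_weights` is hypothetical and not taken from any particular survey package. Each respondent's weight is the population share of their cell divided by the sample share of that cell.

```python
from collections import Counter


def post_stratification_weights(sample_cells, population_shares):
    """Return one weight per respondent.

    weight = (population share of the respondent's cell)
             / (sample share of that same cell)
    """
    n = len(sample_cells)
    counts = Counter(sample_cells)

    # Every cell observed in the sample needs a known population share.
    missing = set(counts) - set(population_shares)
    if missing:
        raise ValueError(f"No population share for cells: {sorted(missing)}")

    return [population_shares[cell] / (counts[cell] / n) for cell in sample_cells]


# Illustrative (made-up) data: younger respondents are over-represented.
sample = ["18-34"] * 50 + ["35-54"] * 30 + ["55+"] * 20
population = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

weights = post_stratification_weights(sample, population)
```

In this illustration, over-represented young respondents are weighted down and under-represented older respondents are weighted up, while the weights still sum to the sample size. Weighting of this kind only corrects for the variables it includes.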

For budget reasons, non-probability samples are often used in cases where probability samples are the more accurate and reliable tool: measuring the level of incidence, estimating the distributions of variables, or finding the magnitude of association between items. On the other hand, probability samples may be a waste of budget when researching future activities, testing reactions to product concepts, building a tracking study, or seeking qualitative feedback.

SOME KEY RECOMMENDATIONS

- When sourcing sample from non-probability panels, “ask for a description of the steps the organization takes to reduce bias between the sample and the target population of interest,” said George Terhanian, then chief research officer of The NPD Group.

- When evaluating panels, ask whether they use convenience sampling, quota sampling, sample matching or something else. For tracking studies, use panels that provide sample matching or quota sampling using tenure.

- “Be sure to quota any demographic characteristics that matter,” said Reg Baker, executive director of the Market Research Institute International. “Clients sometimes will ask for quotas on age, gender and region, but they also expect the distribution on other demographics like education or income to match the population. They often don’t.”

- When writing the questionnaire, include a few questions with benchmarks from probability samples, to give you another window into the variance reported by your sample. (Keep in mind that this variance can differ widely from benchmark to benchmark.)

- Recognize that sophisticated weighting schemes may not minimize sample bias.

- Don’t report sampling error for non-probability panels, but do consider reporting de facto error ranges that are broader than sampling error and based on what you observed from the benchmarks.
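The recommendations above do not prescribe a formula for a de facto error range, so the following is only one possible sketch. It assumes the range is summarized by the mean and maximum absolute gap, in percentage points, between the survey's estimates and the probability-sample benchmarks. The question names and all figures are invented for illustration, and `benchmark_gaps` is a hypothetical helper.

```python
def benchmark_gaps(sample_estimates, benchmarks):
    """Compare survey estimates with benchmark values (both in percent).

    Returns the per-question absolute gaps plus their mean and maximum,
    all in percentage points.
    """
    gaps = {
        question: abs(sample_estimates[question] - benchmark)
        for question, benchmark in benchmarks.items()
    }
    mean_gap = sum(gaps.values()) / len(gaps)
    max_gap = max(gaps.values())
    return gaps, mean_gap, max_gap


# Invented figures, for illustration only.
survey = {"smokes": 22.0, "owns_home": 58.0, "has_passport": 51.0}
benchmark = {"smokes": 14.0, "owns_home": 65.0, "has_passport": 45.0}

gaps, mean_gap, max_gap = benchmark_gaps(survey, benchmark)
print(f"Mean gap: {mean_gap:.1f} points; largest gap: {max_gap:.1f} points")
```

Reporting both figures reflects the caution above that the gap can differ widely from one benchmark to the next.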

For a far more thorough treatment of these issues, please see the free 125-page report from the American Association for Public Opinion Research: “Report of the AAPOR Task Force on Non-probability Sampling”.

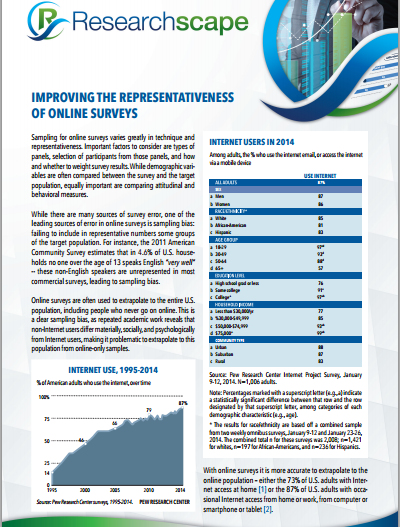

This is an excerpt from the free Researchscape white paper, “Improving the Representativeness of Online Surveys”. Download your own copy now.
